Creating a Cleaner Semantic Data Model for XBRL

Posted Wednesday, February 18. By Scott Theis, President/CEO, Novaworks; Chair, XBRL US Domain Steering Committee (DSC); Leader, XBRL Technical Advisory Committee for XBRL US (XTAC); Member, XBRL Standards Board and OIM Working Group.

In our last blog, Modernizing XBRL, we explored why the time is right to simplify and modernize the XBRL technical specification. One of the strongest forces driving this modernization is the rapid rise of artificial intelligence. Structured data consistently outperforms unstructured formats in machine‑learning environments. A Suffolk University study underscored this point by comparing LLM performance on financial data presented in XBRL, plain text, and HTML. XBRL delivered dramatically lower error rates on both the face financials and the notes. We return to this study later in this post.

But structure alone isn’t enough. AI systems perform best when the underlying data model is clean, predictable, and easy to interpret. The semantic model (the taxonomy) is the key to how machines understand reported facts. As AI becomes a standard tool for analysts, regulators, investors and data scientists, the clarity of that semantic model becomes just as important as the data itself.

The Problem with XML

XBRL 2.1 was built on XML because XML was the state‑of‑the‑art technology at the time. But XML brings complexity: verbose syntax, rigid structural rules, a multi‑file architecture, and a proliferation of identifiers that make the semantic model harder for both humans and machines to digest. XML is excellent for strict validation and regulatory precision, but it is not optimized for modern AI workflows. In addition, after 25 years of XBRL implementation, we've learned a great deal about how to represent models. Improving how presentations are represented (as cubes) and simplifying foundational structures such as datatypes are long overdue.

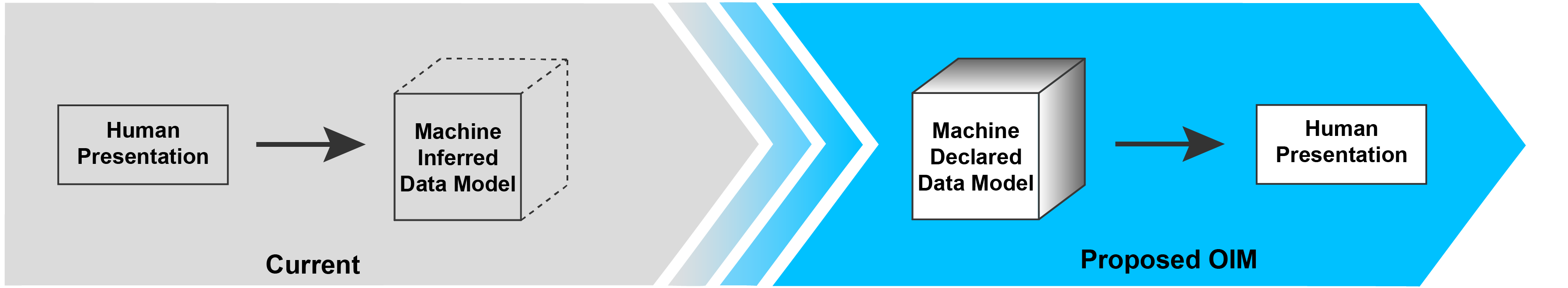

For example, in the present system, the data model is inferred from the presentation. What we are proposing with the Open Information Model (OIM) is a cube model that declaratively defines the structure of the data.
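To make the difference between an inferred and a declared model concrete, here is a minimal Python sketch of a cube that states its structure up front: which concepts it holds, which dimensions apply, and which members each dimension allows. Everything here is hypothetical. The names `CubeDefinition`, `Fact`, and `fits_cube` and the `ex:` prefix are invented for illustration and do not reflect the syntax in the proposed OIM Taxonomy Requirements. Only `us-gaap:Revenues` is a real US GAAP concept name.

```python
from dataclasses import dataclass

# HYPOTHETICAL model: CubeDefinition, Fact, and fits_cube are illustrative names,
# not part of any published OIM taxonomy syntax or API.

@dataclass
class CubeDefinition:
    name: str
    concepts: frozenset[str]               # concepts declared for this cube
    dimensions: dict[str, frozenset[str]]  # dimension -> declared members

@dataclass
class Fact:
    concept: str
    value: float
    dims: dict[str, str]                   # dimension -> member reported on the fact

def fits_cube(cube: CubeDefinition, fact: Fact) -> list[str]:
    """Check a fact against the declared cube; an empty list means it fits."""
    problems: list[str] = []
    if fact.concept not in cube.concepts:
        problems.append(f"concept {fact.concept} not declared in {cube.name}")
    for dim, member in fact.dims.items():
        allowed = cube.dimensions.get(dim)
        if allowed is None:
            problems.append(f"dimension {dim} not declared in {cube.name}")
        elif member not in allowed:
            problems.append(f"member {member} not allowed for {dim}")
    return problems

# "ex:" is a hypothetical company extension prefix.
segment_cube = CubeDefinition(
    name="ex:RevenueBySegmentCube",
    concepts=frozenset({"us-gaap:Revenues"}),
    dimensions={"ex:SegmentAxis": frozenset({"ex:RetailMember", "ex:WholesaleMember"})},
)

ok = Fact("us-gaap:Revenues", 1_000_000.0, {"ex:SegmentAxis": "ex:RetailMember"})
bad = Fact("us-gaap:Revenues", 250_000.0, {"ex:SegmentAxis": "ex:OtherMember"})

print(fits_cube(segment_cube, ok))   # []
print(fits_cube(segment_cube, bad))  # ['member ex:OtherMember not allowed for ex:SegmentAxis']
```

The allowed concepts, dimensions, and members are declared rather than reconstructed from presentation relationships. A consumer, whether a validator or an LLM, can therefore check a fact with direct lookups instead of first working out what the structure was meant to be.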

Proposed requirements for the new XBRL specification are now out for public review. The requirements document outlines the goals and general requirements for the next generation of XBRL. Because these requirements will shape XBRL worldwide, it is critical that they be comprehensive. Download the proposed XBRL Taxonomy Model Requirements.

The complexity of XBRL 2.1 becomes a barrier when LLMs or machine‑learning systems try to interpret the taxonomy, because the data model must be inferred rather than declared. Messy or overly complex structures increase the risk of hallucination, slow processing, make it more likely that errors are missed or even amplified, and require more computational power. Anyone who has experimented with AI tools knows the frustration of feeding them multi‑file, deeply nested, or syntactically heavy data. Initial answers may be incomplete or incorrect, and repeated prompting is often required to get a reliable result.

The Proposed Solution

Work on the new OIM taxonomy makes significant improvements to, and simplifications of, XBRL 2.1. It also provides a path forward by separating XBRL's semantics from its syntax. This separation allows the taxonomy to be expressed as an object model that AI can consume easily. JSON is not the goal in itself. The goal is a syntax‑independent semantic model that is clean, consistent, and easy for both humans and machines to understand. JSON is, however, the natural first choice for expressing that model because it aligns with how modern AI systems, data pipelines, and developers work. JSON is lightweight, object‑oriented, and natively parsed by virtually every contemporary programming environment. But JSON is only a syntax; it does not define the model itself. That's where we come in.

The proposed OIM Taxonomy Requirements will remove the complexity that comes from inferring the data model. When the data model and its structure are defined declaratively, machines can interpret the data more consistently regardless of syntax and then express that data in human‑readable formats. The proposed OIM will also consolidate many XML‑specific identifiers into a single, simple standard identifier. It will also reduce the multi‑file structure of XBRL 2.1 to a streamlined, single‑file model. These changes will dramatically improve readability and reduce the cognitive load on AI systems. When an LLM can directly access a clean, unified semantic model, it can interpret reported facts more accurately and with far less computational overhead.
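A second sketch illustrates the single‑identifier, single‑file idea. In XBRL 2.1, a concept is declared as an element in a schema and given an `id`. Separate linkbase files then reference it through `xlink:href` locators that point at that `id`. In the hypothetical JSON document below, concepts and relationships live in one file, and every reference uses the same prefixed name. The keys (`concepts`, `relationships`, `dataType`, and so on) and the `ex:` concepts are assumptions made for illustration. They are not the format the OIM work will define.

```python
import json

# HYPOTHETICAL single-file taxonomy: key names and "ex:" concepts are invented
# for illustration and are not the syntax defined by the OIM Taxonomy work.
TAXONOMY_JSON = """
{
  "namespaces": {"ex": "http://example.com/taxonomy"},
  "concepts": [
    {"name": "ex:Revenues", "dataType": "monetary", "periodType": "duration"},
    {"name": "ex:CostOfRevenue", "dataType": "monetary", "periodType": "duration"},
    {"name": "ex:GrossProfit", "dataType": "monetary", "periodType": "duration"}
  ],
  "relationships": [
    {"type": "summation", "source": "ex:GrossProfit", "target": "ex:Revenues", "weight": 1},
    {"type": "summation", "source": "ex:GrossProfit", "target": "ex:CostOfRevenue", "weight": -1}
  ]
}
"""

def load_taxonomy(text: str) -> tuple[dict, list]:
    """Parse the document and confirm every relationship names a declared concept."""
    doc = json.loads(text)
    concepts = {c["name"]: c for c in doc["concepts"]}
    for rel in doc["relationships"]:
        # The same prefixed name identifies a concept everywhere, so resolving a
        # reference is a dictionary lookup rather than following href/id locators.
        for end in ("source", "target"):
            if rel[end] not in concepts:
                raise KeyError(f"undeclared concept {rel[end]!r}")
    return concepts, doc["relationships"]

def children_of(concept: str, relationships: list) -> list[tuple[str, int]]:
    """Return (child, weight) pairs for a concept's summation relationships."""
    return [(r["target"], r["weight"]) for r in relationships
            if r["source"] == concept and r["type"] == "summation"]

concepts, rels = load_taxonomy(TAXONOMY_JSON)
print(children_of("ex:GrossProfit", rels))  # [('ex:Revenues', 1), ('ex:CostOfRevenue', -1)]
```

A real OIM taxonomy would presumably carry more (labels, datatype constraints, cubes). The point of the sketch is narrower. With one file and one identifier form, a parser or an LLM does not have to stitch schema and linkbase files together to see how concepts relate.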

In short: a new OIM taxonomy will provide a cleaner semantic model. JSON is the best first expression of that model.

If AI Can Extract Data, Why Use XBRL?

This is the paramount question. There is a common expectation that AI will be the "be-all and end-all" for data creation and analysis. Perhaps we'll get there, but as the saying goes: there's no such thing as a free lunch.

I am sure many readers have experimented with AI for financial information. More formally, the Suffolk University study referenced earlier, AI determinants of success and failure: The case of financial statements, tested the performance of LLMs on data formatted in XBRL, in plain text, and in HTML. By far, XBRL was the winner, with significantly lower error rates on data from both the face financials and the notes. A similar result was shown in the presentation XBRL in the Age of AI, given by the University of Wisconsin‑Whitewater at the XBRL US Data Standards Forum in December 2025. Several speakers also explored the topic in detail at Digital Reporting in Europe in June 2025. These included the session Feeding AI Right: XBRL vs. Unstructured Data, which described how structured data powers reliable intelligence.

So, the conclusion: XBRL significantly improves accuracy for AI, but we must improve XBRL through better context and clearer data representation.

What’s Next

The time is right to improve how XBRL represents semantic data, driven by the AI revolution and the increasing demand for structured, high‑quality data.

XBRL’s global success provides a strong foundation for this next phase. As artificial intelligence becomes a standard tool for financial analysis, regulators and data scientists will increasingly rely on clear, accessible semantic models and cleanly structured data. We believe the development of the new OIM taxonomy positions XBRL for this future by modernizing the taxonomy layer and enabling syntax‑independent modeling that works well with today’s AI technologies.

We’re building on a great legacy platform and preparing for the next decade of innovation. By decoupling meaning from markup and embracing modern data structures, XBRL can remain the global standard for digital reporting while becoming far more compatible with the tools shaping the future of data intelligence.

Stay tuned. The evolution of XBRL is accelerating, and the shift toward a cleaner, syntax‑independent semantic model is just beginning.

Get involved. To join XBRL US' XBRL Technical Advisory Working Group (XTAC), email info@xbrl.us to learn how. To join the XBRL International OIM Spec Group, email info@xbrl.us.

Ask questions. Use the OIM Specification Forum Discussion: if you have a question or comment, it's likely someone else does, too.
