By Campbell Pryde, President and CEO, XBRL US

Since 2015, the Data Quality Committee (DQC) of XBRL US has been creating freely available validation rules that provide clearly defined guardrails for corporate issuers to follow. The automated, standardized rules give issuers the tools to identify and resolve inconsistencies and inaccuracies in their filings so that consistent, high-quality financials can be produced from their XBRL data.

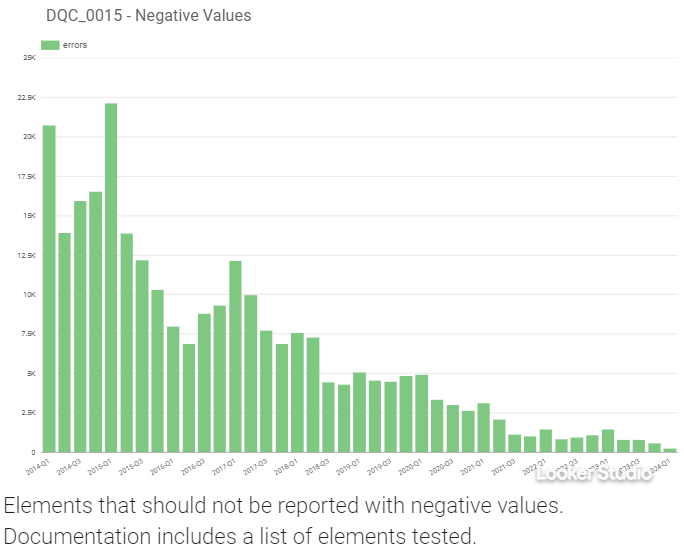

The DQC continuously measures the impact of each rule on the number of errors over time. It has quantitative evidence that the rules have been highly effective at reducing errors and that they provide a strong incentive for filing companies to prepare their XBRL-formatted financials within the guardrails set by the DQC standards. The chart below illustrates the decline in one of the most common errors found in filings, negative value errors. Similar charts can be found for many other error types in the DQC's Aggregated Real-time Filing Errors details.

Ingredients for Success

The accomplishments of the DQC can be attributed to standardization, attention to detail, ease-of-use, and perhaps most importantly, collaboration.

Standardization and attention to detail

The DQC rules leverage the highly granular XBRL structured data format. Because every characteristic of a data point can be identified, including data type, dimensional characteristics, time period and period type, rules can be designed to pinpoint specific problems. Company errors could be based on using the wrong XBRL concept, reporting a value as negative when it should be positive, applying a dimensional concept incorrectly, or even assigning a date to a fact that does not align with the report date.
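To make the idea concrete, here is a minimal sketch of how a negative value check could be written against a structured fact. It is an illustration only, not the DQC's implementation: the `Fact` class, the `check_negative_value` function, and the short list of concepts are all hypothetical, and the sketch ignores dimensions entirely.

```python
from dataclasses import dataclass, field
from datetime import date
from decimal import Decimal
from typing import Dict, Optional

# Hypothetical subset of concepts that should never be negative.
# This is NOT the DQC's actual list; it exists only for illustration.
NON_NEGATIVE_CONCEPTS = {"Assets", "InventoryNet"}


@dataclass
class Fact:
    """A simplified stand-in for one XBRL data point."""
    concept: str
    value: Decimal
    period_type: str          # "instant" or "duration"
    period_end: date
    dimensions: Dict[str, str] = field(default_factory=dict)


def check_negative_value(fact: Fact) -> Optional[str]:
    """Return a message if the fact is negative but should not be, else None."""
    if fact.concept in NON_NEGATIVE_CONCEPTS and fact.value < 0:
        return (
            f"{fact.concept} is reported as {fact.value} for the period ending "
            f"{fact.period_end.isoformat()}, but this concept is expected to be "
            f"zero or positive."
        )
    return None


if __name__ == "__main__":
    bad = Fact("Assets", Decimal("-100"), "instant", date(2023, 12, 31))
    print(check_negative_value(bad))
```

Because the concept, value, and period all travel with the fact, the check needs no knowledge of where the number appears in the filing.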

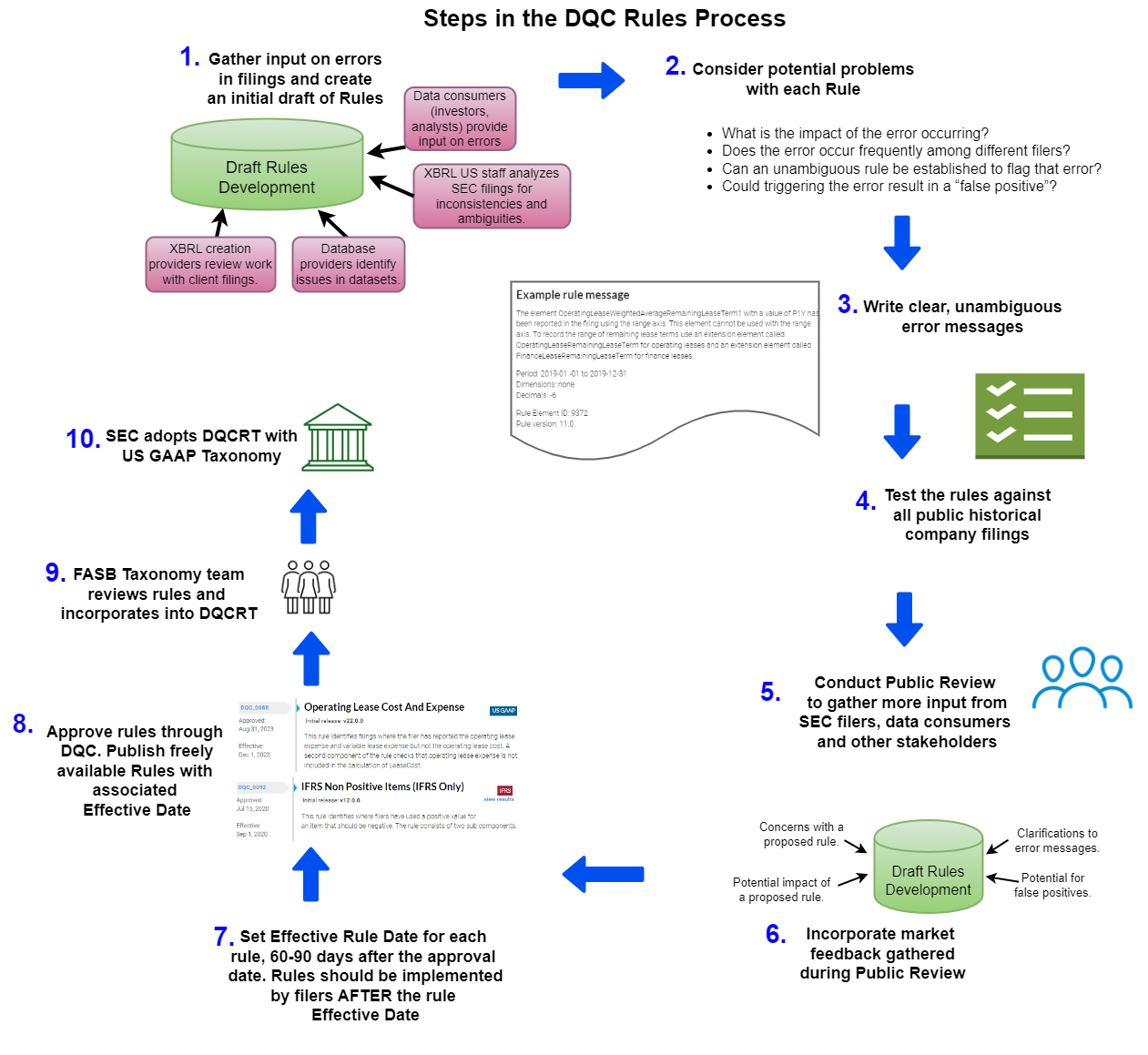

Each rule is painstakingly created and implemented through a 10-step process as shown below, designed to ensure that rules are thoroughly researched and can be consistently used by all registrants.

The rigorous process followed to build and implement the rules ensures that registrants can stay within the guardrails. For example, in Step 7, the DQC sets an “effective date” for each rule that tells issuers, data users, and vendors the exact date on which issuers should apply the rule. Usually the effective date is 60-90 days after the approval date.

In the period prior to the effective date, filers can begin planning to implement rules that will soon take effect. DQC errors identified prior to the effective date do not necessarily imply a defect in the filing; they should be reviewed and used only as an information point to provide feedback on the efficacy of the rule. No assessment should be made on compliance with DQC rules prior to this date. This is an important guardrail that gives reporting entities and data users a set point in time after which the new rule must be followed.
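The effective date logic described above can be sketched in a few lines. This is a hedged illustration, not DQC code: the function names and the "informational" and "error" labels are assumptions, and the 60 to 90 day window reflects only the usual range mentioned earlier, not a fixed requirement.

```python
from datetime import date, timedelta
from typing import Tuple


def usual_effective_window(approval_date: date) -> Tuple[date, date]:
    """Return the usual range (60 to 90 days after approval) for an effective date.

    Assumption: the range is taken from the article's "usually"; the actual
    effective date for any rule is whatever the DQC sets.
    """
    return (approval_date + timedelta(days=60), approval_date + timedelta(days=90))


def classify_finding(filing_date: date, effective_date: date) -> str:
    """Label a rule finding based on whether the rule was in effect.

    Before the effective date a finding is only an information point;
    on or after it, the rule is expected to be followed.
    """
    return "error" if filing_date >= effective_date else "informational"


if __name__ == "__main__":
    approval = date(2024, 1, 15)          # hypothetical approval date
    earliest, latest = usual_effective_window(approval)
    print(earliest, latest)
    print(classify_finding(date(2024, 2, 1), earliest))
```

Treating the effective date itself as the first day the rule applies is a design choice in this sketch, based on the description of it as the exact date on which issuers should apply the rule.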

Ease-of-use

Guardrails can only be followed when they are clearly marked and easy to follow. Because XBRL data is machine-readable, rules can be run automatically without manual review.

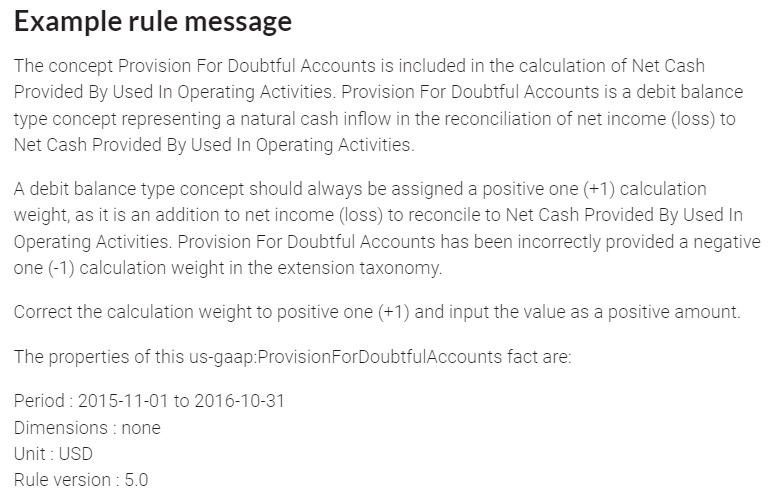

The DQC works hard to ensure that the rule message (see Step 3) is clearly written and unambiguous. Again, the granularity of XBRL data makes this possible because each datapoint can be identified. All the context needed to understand a fact, such as period type, data type, time period and dimensional characteristics, is embedded in the datapoint itself. The message example below alerts a filer that the date assigned to a specific fact occurs earlier than the report date and should be corrected. Unambiguous messaging not only ensures consistency of application but also makes it easy for filers to adhere to the rules.
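As a rough illustration of how a message can be assembled from the context embedded in a datapoint, consider the sketch below. The function name, its parameters, the sample values, and the message wording are all hypothetical; actual DQC messages are defined by the committee and will differ.

```python
from datetime import date
from typing import Dict, Optional


def date_rule_message(
    concept: str,
    value: str,
    fact_date: date,
    report_date: date,
    dimensions: Optional[Dict[str, str]] = None,
) -> Optional[str]:
    """Return a message if the fact's date is earlier than the report date.

    Returns None when the dates are consistent, so no message is raised.
    """
    if fact_date >= report_date:
        return None
    # List any dimensional qualifiers so the filer can find the exact fact.
    dims = ", ".join(
        f"{axis}={member}" for axis, member in sorted((dimensions or {}).items())
    ) or "none"
    return (
        f"The fact {concept} with value {value} is dated {fact_date.isoformat()}, "
        f"which is earlier than the report date {report_date.isoformat()}. "
        f"Dimensions: {dims}."
    )


if __name__ == "__main__":
    print(
        date_rule_message(
            "ExampleConcept",                     # hypothetical concept name
            "12",
            fact_date=date(2023, 9, 30),
            report_date=date(2023, 12, 31),
            dimensions={"ExampleAxis": "ExampleMember"},
        )
    )
```

Every element of the message comes from the fact itself, which is what allows the wording to point a filer to one specific value rather than to a general area of the filing.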

Collaboration

Members of XBRL US represent the majority of the public companies reporting financials each quarter to the SEC EDGAR system. Filing agents, tool providers, data analytics companies, and nonprofit entities are part of the consortium. Driven by a shared vision to improve the usability of XBRL data, they collaborate to set a baseline for quality in XBRL-reported financial data.

Not only do these dedicated organizations provide funding, they also actively work together to identify problems in filings and create the standardized rules that can be used to correct them. And most importantly, they work with their clients to ensure that the rules are consistently used by all issuers.

Expanding on success

Given the rules' success in boosting data quality, the FASB in 2020 began conducting its own review of each rule set and incorporating the rules into a taxonomy called the DQC Rules Taxonomy, which is published along with the US GAAP Taxonomy each year.

The SEC EDGAR System supports the use of rules in the DQC Rules Taxonomy by informing issuers when one of the DQC rules is triggered by their filing.

The Center for Data Quality has made enormous strides. The ingredients of success all come down to standardization, attention to detail, collaboration, and a common cause.